This is my first blog post. I recently started learning Python and was assigned a web scraping task. I searched all over YouTube for a concise summary of exactly what to do in web scraping. After spending hours on it, this is what I’ve learned, written up to make it easy for my fellow readers.

Let’s get started.

Instead of picking a heavy website with lots of complicated HTML, choose a simpler one, such as a blog. I chose my previous blog, which was written ages ago. You can refer to it here: https://thegrowinginch.blogspot.com/

Let me put web scraping into simpler words for you:

There is a lot of data on some specific website.

You want to access only a specific piece of that information and work on it.

What will you do? The answer, of course, is to extract that information, and that is web scraping. Now the question is: how do you do it?

Remember the acronym ETL: Extract, Transform, Load.

There are two ways we can scrape the information:

Using APIs

Using libraries such as BeautifulSoup or Scrapy

Here, I am using BeautifulSoup.

You need to import the following libraries:

import requests

from bs4 import BeautifulSoup

If you do not have them installed, don’t worry. Install them with pip (the BeautifulSoup package is published as beautifulsoup4):

pip install requests

pip install beautifulsoup4

The next step is to set the URL we want to scrape. Here it is the blog mentioned above:

url = "https://thegrowinginch.blogspot.com/"

EXTRACT

Get the HTML content from the URL

r = requests.get(url)

htmlcontent = r.content

soup = BeautifulSoup(htmlcontent, "html.parser")
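Network requests can fail or hang, so it may help to add a timeout and a status check before parsing. Here is a minimal sketch of that idea, not the code used for this blog. The helper name `fetch_html` and its `session` parameter are made up for illustration; `timeout` and `raise_for_status()` are standard parts of requests.

```python
import requests


def fetch_html(url, session=None, timeout=10):
    """Download a page and return its raw HTML bytes.

    `session` is a hypothetical hook: any object with a requests-style
    `get` method. It defaults to the `requests` module itself.
    """
    http = requests if session is None else session
    # Give up after `timeout` seconds instead of waiting forever
    response = http.get(url, timeout=timeout)
    # Raise an error for 4xx/5xx responses instead of parsing an error page
    response.raise_for_status()
    return response.content
```

With this in place, `htmlcontent = fetch_html(url)` could stand in for the two lines that call `requests.get` and read `r.content`.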

You now have the page’s HTML source parsed into a tree of elements. Next, we will search this tree for the pieces we need.
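To get a feel for how the parsed tree can be searched, here is a small sketch on a made-up HTML snippet (the markup and its `post` class are invented for this example, not taken from the real blog). It shows the difference between `find`, which returns the first match, and `find_all`, which returns every match.

```python
from bs4 import BeautifulSoup

# A made-up page, only to illustrate navigating the tree
sample_html = """
<html>
  <head><title>My Blog</title></head>
  <body>
    <div class="post"><h3>First post</h3></div>
    <div class="post"><h3>Second post</h3></div>
  </body>
</html>
"""

soup = BeautifulSoup(sample_html, "html.parser")

page_title = soup.title.string                   # the text inside <title>
first_post = soup.find("div", class_="post")     # first matching tag, or None
all_posts = soup.find_all("div", class_="post")  # every matching tag

# Pull the heading text out of each post
headings = [post.h3.get_text(strip=True) for post in all_posts]
print(page_title, headings)
```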

TRANSFORM

Each post on my blog has a few attributes: title, date of posting, and number of comments. This is what I wish to extract.

def transform(soup):

    # soup.title is the whole <title> tag; soup.title.string would give just its text

    header = soup.title

    print("The title for my blog is:", header)

    # Find the div elements whose class marks a blog post

    divs = soup.find_all("div", class_="post hentry uncustomized-post-template")

    # Loop through the posts and pull out the fields we wish to extract

    for item in divs:

        title = item.find("h3", class_="post-title entry-title").text.replace("\n", "")

        date = item.find("a", class_="timestamp-link").text.strip()

        comments = item.find("a", class_="comment-link").text.replace("\n", "")

        # Add these attributes to a dictionary

        blog = {

            "title": title,

            "date": date,

            "comments": comments}

        bloglist.append(blog)

# Create bloglist as an empty list, then call the transform function

bloglist = []

transform(soup)

print(bloglist)

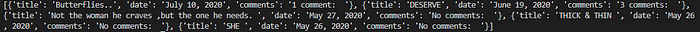

This will give you output something like this
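One thing to watch for: `find` returns `None` when a tag is missing, so calling `.text` on the result would raise an error for a post that has, say, no comment link. Below is a hedged sketch of a more forgiving variant, run on made-up markup that imitates the class names above. The helper `text_or_none` and the sample posts are assumptions for illustration. It also matches on single class names such as `post`, since BeautifulSoup matches a single class even when the tag carries several.

```python
from bs4 import BeautifulSoup

# Hypothetical markup imitating the blog's class names; the posts are made up
sample_html = """
<div class="post hentry uncustomized-post-template">
  <h3 class="post-title entry-title">
    Hello world
  </h3>
  <a class="timestamp-link">January 1, 2020</a>
  <a class="comment-link">
    2 comments
  </a>
</div>
<div class="post hentry uncustomized-post-template">
  <h3 class="post-title entry-title">No date here</h3>
</div>
"""


def text_or_none(parent, tag, css_class):
    """Return the stripped text of the first matching child, or None if absent."""
    element = parent.find(tag, class_=css_class)
    return element.get_text(strip=True) if element is not None else None


def transform(soup):
    """Build one dict per post; missing fields become None instead of raising."""
    posts = []
    for item in soup.find_all("div", class_="post"):
        posts.append({
            "title": text_or_none(item, "h3", "post-title"),
            "date": text_or_none(item, "a", "timestamp-link"),
            "comments": text_or_none(item, "a", "comment-link"),
        })
    return posts


bloglist = transform(BeautifulSoup(sample_html, "html.parser"))
print(bloglist)
```

Returning the list from the function, rather than appending to a variable defined outside it, is a design choice that makes the function easier to reuse and test.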

LOAD

The easiest way to save the data is to load it into a CSV file:

import pandas as pd

df = pd.DataFrame(bloglist)

df.to_csv("blogs.csv")

This will create a CSV file named blogs.csv and write the dataset into it. To refer to the source code, check out my GitHub.
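By default, `to_csv` also writes the DataFrame's row labels (0, 1, 2, ...) as an extra first column. If you do not want that column, passing `index=False` should leave it out. Here is a small sketch with made-up rows shaped like the dictionaries from the transform step; the temporary folder is only there to keep the example tidy.

```python
import os
import tempfile

import pandas as pd

# Made-up rows shaped like the dictionaries built in the transform step
bloglist = [
    {"title": "Hello world", "date": "January 1, 2020", "comments": "2 comments"},
    {"title": "Second post", "date": "February 1, 2020", "comments": "0 comments"},
]

df = pd.DataFrame(bloglist)

# Write to a temporary folder so the sketch does not clutter the working directory
csv_path = os.path.join(tempfile.mkdtemp(), "blogs.csv")
df.to_csv(csv_path, index=False)  # index=False leaves out the row labels

# Read the file back to confirm what was saved
saved = pd.read_csv(csv_path)
print(saved)
```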

Voila! You’ve successfully done web scraping.

Open to suggestions and comments. I am still a learner and will always be!